RAG: What is it, and why is it getting so much attention?

By Ali Naqvi

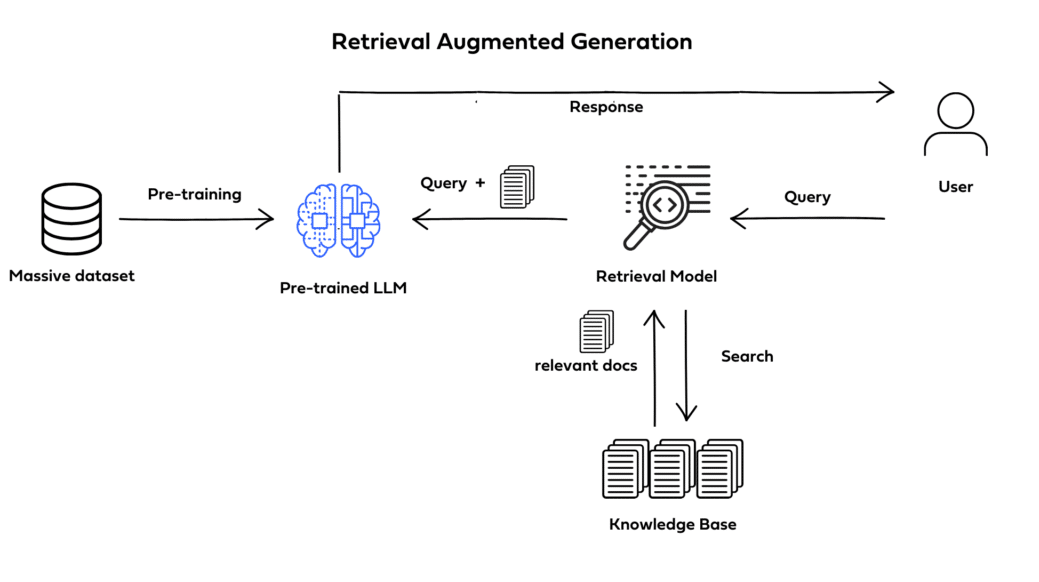

In the ever-changing world of AI, Retrieval-Augmented Generation (RAG) has become a key technique for supercharging large language models (LLMs). By combining external knowledge retrieval with generative models, RAG can produce answers that are more contextually grounded and accurate. This post covers the use cases of RAG, where to use it and where not to, the latest trends, and tools for building RAG applications, and it compares RAG to fine-tuning.

What is RAG

RAG combines information retrieval systems with generative AI models. In this framework, a retriever component searches external knowledge bases for relevant information, and a generator model then uses that information to produce informed, contextually relevant answers. This is especially useful when the required information changes frequently or is domain-specific, and is therefore missing or outdated in the training data of the generative model.
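The retrieve-then-generate loop described above can be sketched in plain Python. This is a minimal illustration under stated assumptions: a toy bag-of-words cosine retriever stands in for the embedding model and vector store a real system would use, and the generator is left as a caller-supplied function. All names here (`retrieve`, `build_prompt`, `rag_answer`) are hypothetical, not taken from any library.

```python
import math
import re
from collections import Counter
from typing import Callable


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Retriever (toy stand-in): return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation: place the retrieved passages in front of the question."""
    passages = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{passages}\n"
        f"Question: {query}\nAnswer:"
    )


def rag_answer(query: str, documents: list[str], generate: Callable[[str], str], k: int = 2) -> str:
    """Retrieve, augment, then hand the prompt to any generator callable."""
    return generate(build_prompt(query, retrieve(query, documents, k)))


docs = [
    "The refund policy allows returns within 30 days of purchase.",
    "Our office is closed on public holidays.",
    "Shipping to Canada takes 5 to 7 business days.",
]
# Stand-in generator that just returns the prompt; replace it with a real LLM call.
print(rag_answer("What is the refund policy for returns?", docs, generate=lambda p: p, k=1))
```

Keeping the generator as a plain function makes the retrieval step testable on its own and lets any model be plugged in later.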

Where to use RAG

RAG is good for:

1. Dynamic or Changing Information: Applications like news summarization, financial market analysis, or real-time customer support benefit from RAG’s ability to fetch information from external sources.

2. Domain-Specific Knowledge: Fields like healthcare, finance, or technical support, where exact and specific information is required, can use RAG to fetch and generate answers from authoritative external data.

3. Resource Savings: By using external knowledge bases, RAG reduces the need to retrain models extensively and saves computational resources and time.

4. Better Accuracy and Trust: RAG helps reduce AI hallucinations (where models generate plausible but incorrect information) by anchoring answers in verifiable external data.

Where not to use RAG

While RAG is helpful, it’s not good for:

1. Low-Latency Applications: The retrieval step adds latency, so RAG may not suit applications that require near-instant answers.

2. No Robust External Knowledge Base: If no good external data source is available, RAG has nothing reliable to retrieve and offers little benefit.

3. Offline or Restricted Environments: RAG needs access to its knowledge source, so it is hard to use in environments with no network access or restricted data access, unless the index can be hosted locally.

4. Sensitive Data: In cases where highly confidential information is involved, integrating external data sources can pose a security and privacy risk.

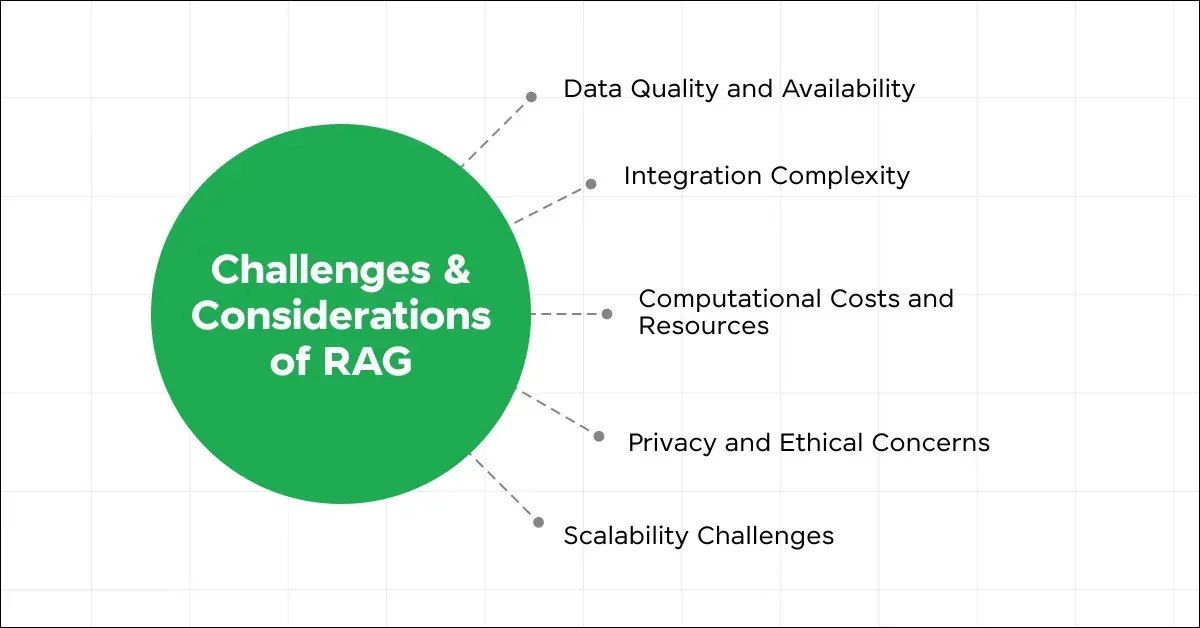

Latest Trends and Research in RAG

Recent RAG research has focused on improving accuracy, speed, and context handling for complex, knowledge-intensive tasks. Key advances include:

1. Speculative RAG: This framework splits the work between a "RAG specialist" and a "RAG generalist". The specialist, a smaller language model, generates multiple answer drafts in parallel, each from a different subset of the retrieved documents; the generalist, a larger model, verifies these drafts and selects the most accurate one. Because the large model only checks drafts instead of processing every retrieved document, this speeds up generation, and the authors report significant accuracy gains across multiple benchmarks.

2. Graph-RAG: RAG over graph-structured knowledge rather than plain text. Graph-RAG retrieves from graph-structured data, bringing in relational and structural knowledge that can improve factual accuracy and credibility. It improves on traditional RAG through graph-based indexing and retrieval, which makes it especially useful where the relationships between facts are crucial to context, such as knowledge bases for medical or technical fields.
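The draft-then-verify flow of Speculative RAG can be sketched in a few lines. This is a minimal illustration, not the implementation from the paper: `draft_fn` and `score_fn` are hypothetical placeholders for the small specialist model and the large generalist verifier, and the round-robin split of documents into subsets is an assumption made for simplicity.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def partition(docs: list[str], n_subsets: int) -> list[list[str]]:
    """Split the retrieved documents into n disjoint subsets (round-robin)."""
    return [docs[i::n_subsets] for i in range(n_subsets)]


def speculative_rag(
    question: str,
    docs: list[str],
    draft_fn: Callable[[str, list[str]], str],  # hypothetical small "specialist" drafter
    score_fn: Callable[[str, str], float],      # hypothetical large "generalist" verifier
    n_subsets: int = 3,
) -> str:
    subsets = [s for s in partition(docs, n_subsets) if s]
    if not subsets:
        raise ValueError("no documents retrieved")
    # Drafting: the small model writes one draft per document subset, in parallel.
    with ThreadPoolExecutor() as pool:
        drafts = list(pool.map(lambda s: draft_fn(question, s), subsets))
    # Verification: the large model only scores the drafts instead of
    # reading every retrieved document itself.
    return max(drafts, key=lambda d: score_fn(question, d))


docs = [
    "Paris is the capital of France.",
    "Lyon is in France.",
    "Berlin is the capital of Germany.",
]
draft = lambda q, subset: subset[0]  # toy drafter: answer with the first passage
score = lambda q, d: sum(w in d.lower() for w in q.lower().split())  # toy verifier
print(speculative_rag("capital of france", docs, draft, score))
```

In a real system the verification score would come from the larger model itself; the toy lambdas only make the control flow runnable.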
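To show what graph-based retrieval means in practice, here is a small sketch that stores knowledge as (subject, relation, object) triples, finds the entities named in a question, and collects every triple within a fixed number of hops. The triples, the substring-based entity matching, and the text format handed to the generator are all illustrative assumptions; a real Graph-RAG system would use more robust entity linking and a proper graph store.

```python
from collections import deque

# Toy knowledge graph as (subject, relation, object) triples; illustrative data only.
TRIPLES = [
    ("metformin", "treats", "type 2 diabetes"),
    ("type 2 diabetes", "risk_factor", "obesity"),
    ("metformin", "side_effect", "nausea"),
    ("insulin", "treats", "type 1 diabetes"),
]


def build_adjacency(triples):
    """Index triples by entity so they can be found from either endpoint."""
    adj = {}
    for s, r, o in triples:
        adj.setdefault(s, []).append((s, r, o))
        adj.setdefault(o, []).append((s, r, o))
    return adj


def graph_retrieve(question, triples, hops=2):
    """Breadth-first search: return triples within `hops` edges of any entity in the question."""
    adj = build_adjacency(triples)
    q = question.lower()
    seeds = [e for e in adj if e in q]  # naive entity linking by substring match
    seen = set(seeds)
    found = []
    frontier = deque((e, 0) for e in seeds)
    while frontier:
        entity, depth = frontier.popleft()
        if depth == hops:
            continue
        for s, r, o in adj[entity]:
            if (s, r, o) not in found:
                found.append((s, r, o))
            for nxt in (s, o):
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, depth + 1))
    return found


def to_context(triples):
    """Linearize triples into text lines the generator can read."""
    return "\n".join(f"{s} --{r}--> {o}" for s, r, o in triples)


print(to_context(graph_retrieve("What does metformin treat?", TRIPLES)))
```

The second hop pulls in the obesity risk factor linked to type 2 diabetes, a connected fact that plain text similarity to the question would likely miss, while the unrelated insulin triple is never reached.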

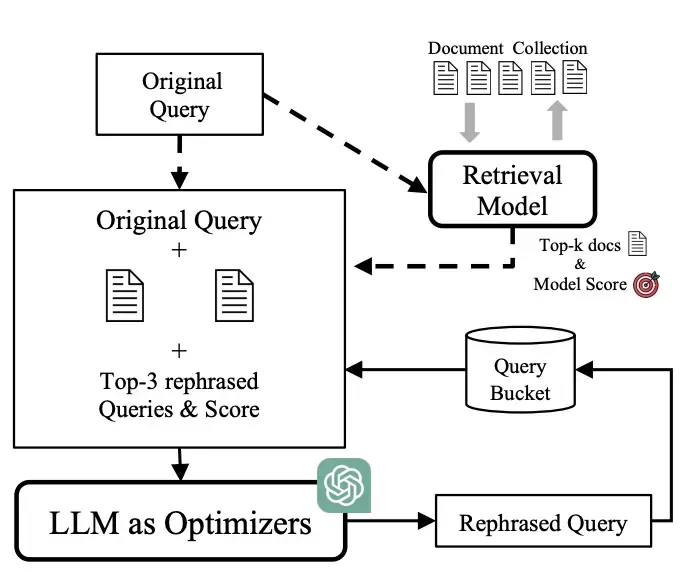

3. RQ-RAG (Refined Query RAG): RQ-RAG refines the query itself before retrieval, using rewriting, decomposition, and disambiguation so that the retrieved documents match what the question actually asks. This improves retrieval quality, and the explicit refined queries make the retrieval process easier to interpret. It is especially useful for complex multi-hop question-answering tasks.
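The value of query decomposition for multi-hop questions can be seen with a toy example. In this sketch, `decompose` is a hypothetical rule-based stand-in for RQ-RAG's learned query refinement (it simply splits on "and" and question marks), and `overlap_retrieve` is a toy word-overlap retriever; neither is the method from the paper.

```python
import re


def decompose(question: str) -> list[str]:
    """Hypothetical stand-in for a learned query refiner: split a compound
    question into sub-queries on the word 'and' and on question marks."""
    parts = re.split(r"\?|\band\b", question)
    return [p.strip() for p in parts if p.strip()]


def overlap_retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Toy retriever: rank documents by the number of shared lowercase words."""
    q = set(re.findall(r"[a-z0-9]+", query.lower()))
    score = lambda d: len(q & set(re.findall(r"[a-z0-9]+", d.lower())))
    return sorted(docs, key=score, reverse=True)[:k]


def refined_retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Retrieve separately for each sub-query, then merge without duplicates."""
    merged = []
    for sub in decompose(question):
        for doc in overlap_retrieve(sub, docs, k):
            if doc not in merged:
                merged.append(doc)
    return merged


docs = [
    "Inception was directed by Christopher Nolan.",
    "Christopher Nolan was born in 1970 in London.",
    "The Dark Knight was released in 2008.",
]
question = "Who directed Inception and when was its director born?"
print(overlap_retrieve(question, docs, k=1))  # single query
print(refined_retrieve(question, docs, k=1))  # one retrieval per sub-query
```

With k=1, retrieving on the full question returns only the Inception passage, while retrieving per sub-query also finds the passage about when Nolan was born, which is the second hop the question needs.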

These innovations highlight RAG's evolution toward creating more specialized and efficient retrieval mechanisms, which are especially useful in applications demanding high factual accuracy and contextual depth. The focus on task-specific refinements and the integration of structured data through Graph-RAG showcase RAG's expanding utility across various domains.

Tools for RAG Apps

Here are some tools and frameworks to build RAG apps:

1. LlamaIndex: A data framework that connects to custom data sources and LLMs to ingest, index, and query data for RAG apps. LlamaIndex provides abstractions for all the stages of building a RAG app so you can connect to different data sources and retrieval strategies.

2. Ollama: An open-source tool for running LLMs locally on your own machine, giving you more control and flexibility in your AI projects. Ollama lets you run models like Llama 3.1, so you can build RAG apps without relying on hosted model APIs.

3. LangChain: A framework for chaining LLM prompts with external retrieval to build complex RAG workflows. LangChain lets you connect different data sources and retrieval methods, making RAG apps more flexible and scalable.
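Calling a model served by Ollama, as mentioned in the list above, needs nothing beyond the Python standard library, because Ollama exposes an HTTP API on the local machine. The sketch below assumes Ollama is running on its default port (11434) and that the `llama3.1` model has already been pulled with `ollama pull llama3.1`; it posts a non-streaming request to the `/api/generate` endpoint and returns the generated text.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt: str, model: str = "llama3.1") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def generate_locally(prompt: str, model: str = "llama3.1") -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

Because `generate_locally` takes a prompt string and returns a string, it can serve as the generation step of a retrieve-then-generate pipeline with no external API involved.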

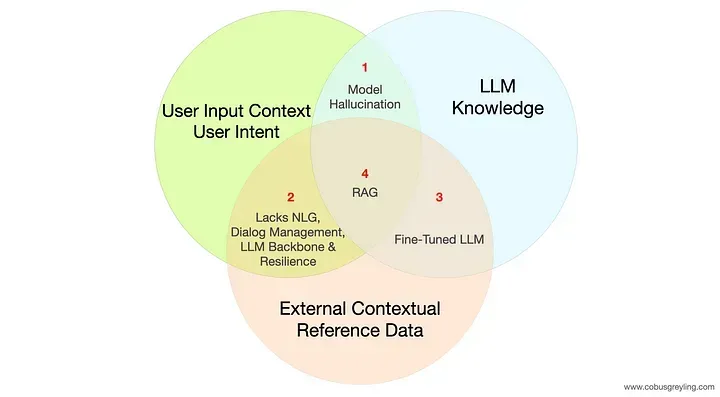

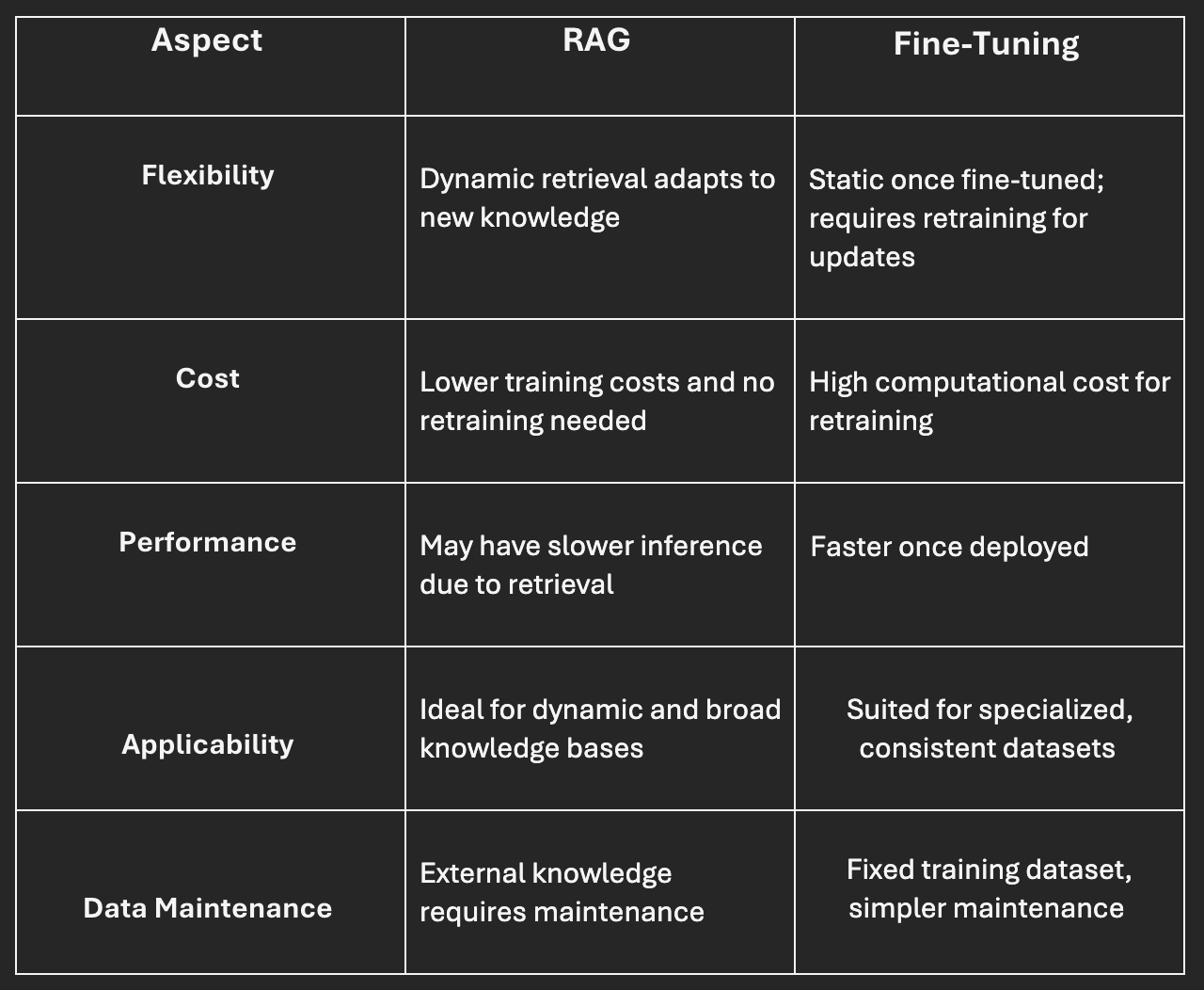

Comparison to Fine-Tuning

When to Choose RAG Over Fine-Tuning

1. Real-Time, Evolving Data

RAG is better if your app uses real-time or changing data (e.g., financial analysis or live event tracking).

2. Domain Versatility

RAG is great for apps that need knowledge from multiple domains, without having to fine-tune a separate model for each domain.

3. Cost and Resource Constraints

If retraining large models is not feasible due to cost or time, then RAG is the more cost-effective option.

4. Explainability and Source Traceability

RAG’s ability to cite external sources makes it better suited to industries like healthcare and finance, where data verification is important.
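Source traceability mostly comes down to keeping track of where each retrieved passage came from. A minimal sketch, assuming the model follows an instruction to cite passages as [n]: the prompt numbers each passage, and the citation markers in the answer are mapped back to source IDs, with out-of-range numbers flagged for review. The function names and source IDs are hypothetical.

```python
import re


def build_cited_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Number each (source_id, text) passage so the model can cite it as [n]."""
    numbered = "\n".join(f"[{i}] ({src}) {text}" for i, (src, text) in enumerate(passages, 1))
    return (
        "Answer the question using only the passages below. "
        "Cite every claim with the passage number in brackets, e.g. [1].\n"
        f"{numbered}\nQuestion: {question}\nAnswer:"
    )


def extract_citations(answer: str, passages: list[tuple[str, str]]) -> tuple[list[str], list[int]]:
    """Map [n] markers in the answer back to source IDs; also report invalid numbers."""
    cited, invalid = [], []
    for n in map(int, re.findall(r"\[(\d+)\]", answer)):
        if 1 <= n <= len(passages):
            src = passages[n - 1][0]
            if src not in cited:
                cited.append(src)
        elif n not in invalid:
            invalid.append(n)
    return cited, invalid


passages = [
    ("policy.pdf#p3", "Refunds are issued within 14 days."),
    ("faq.html", "Refunds go to the original payment method."),
]
answer = "Refunds arrive within 14 days [1] on the original card [2][5]."
print(extract_citations(answer, passages))
```

Flagging citations that point to no retrieved passage gives reviewers a cheap first check before trusting an answer.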

Conclusion

Retrieval-Augmented Generation (RAG) is a powerful tool that bridges the gap between generative AI and real-time knowledge retrieval. It’s not for every use case, but RAG’s flexibility and cost-effectiveness make it a good option for apps that need up-to-date, domain-specific, or highly accurate responses.