Hallucinations in Large Language Models: Why Do They Occur?

By Ali Naqvi

Large Language Models (LLMs) have changed the game for natural language processing, enabling all sorts of applications, from chatbots to code generation. But they’re not perfect. One of the biggest problems is hallucinations. A hallucination happens when a model produces content that sounds correct but isn’t.

Hallucinations happen for several reasons:

Training Data Limitations: Models trained on massive datasets inherit any inaccuracies or biases in that data, which can lead them to replicate errors and learn false patterns.

Ambiguous Prompts: Prompts that are vague or poorly structured can produce irrelevant or incorrect output.

Overgeneralization: LLMs can infer patterns that don’t exist and produce content that looks valid but has no factual basis, with no data backing the inference.

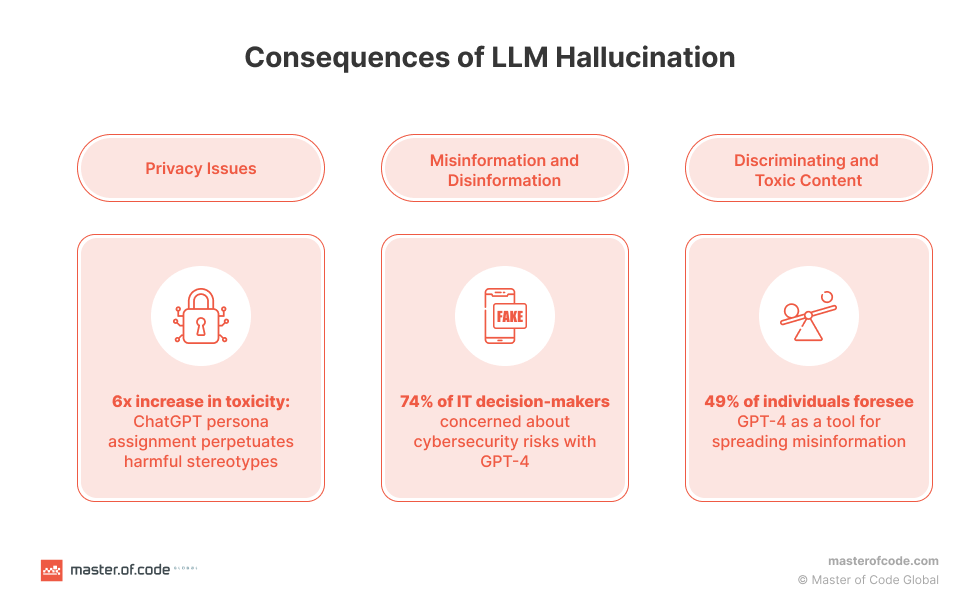

The consequences of hallucinations range from simple misinformation to severe errors in critical applications like healthcare, finance, and cybersecurity.

How to Fix Hallucinations

Several techniques have been developed to address hallucinations in LLM output.

1. Retrieval-Augmented Generation (RAG)

RAG is an excellent technique that anchors LLM output by combining retrieval mechanisms with generative models. Instead of relying solely on the model’s training data, RAG fetches relevant documents or knowledge in real time to provide a factual basis for the generated content. In my last blog, “RAG: What is it? Why is everyone talking about it?”, I broke down RAG and how it grounds generative models in data retrieved in real time. An advanced variant called Graph RAG uses structured knowledge graphs to add another layer of factual grounding and to support multi-hop reasoning.

In complex applications like cybersecurity, this technique can cut hallucinations by as much as half.
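To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It assumes a small in-memory corpus and uses simple term overlap in place of the embedding-based vector search a real RAG pipeline would typically use. The function names and sample documents are hypothetical, and the call to the model itself is left out.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents that share the most terms with the query.

    Term-overlap scoring is a simple stand-in (an assumption of this sketch)
    for the embedding-based vector search a production system would use.
    """
    query_terms = set(tokenize(query))
    scored = []
    for doc in documents:
        counts = Counter(tokenize(doc))
        scored.append((sum(counts[t] for t in query_terms), doc))
    # list.sort is stable, so documents with equal scores keep their order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no terms with the query at all.
    return [doc for score, doc in scored[:k] if score > 0]


def build_grounded_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved passages in the prompt and tell the model to stay within them."""
    sources = retrieve(query, documents, k)
    context = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(sources, start=1))
    return (
        "Answer using ONLY the numbered sources below. "
        "If they do not contain the answer, reply \"I don't know.\"\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )
```

The returned string would then be sent to whichever LLM you use. The grounding comes from two places: the model sees retrieved facts it may not have memorized, and the instruction gives it an explicit alternative to guessing when the sources are silent.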

2. Fine-tuning and Domain-Specific Tuning

Fine-tuning models on domain-specific datasets helps align LLM output with domain-specific knowledge. Techniques like Parameter-Efficient Fine-Tuning (PEFT), which updates only a small fraction of a model’s parameters and makes fine-tuning practical even with limited data and compute, were discussed in detail in my previous article, "Fine-Tuning: Taking AI Models to the Next Level."

Fine-tuning is a key lever for optimizing LLMs, and PEFT is an efficient way to do it. By aligning outputs with accurate, domain-specific data, these methods directly reduce hallucinations.

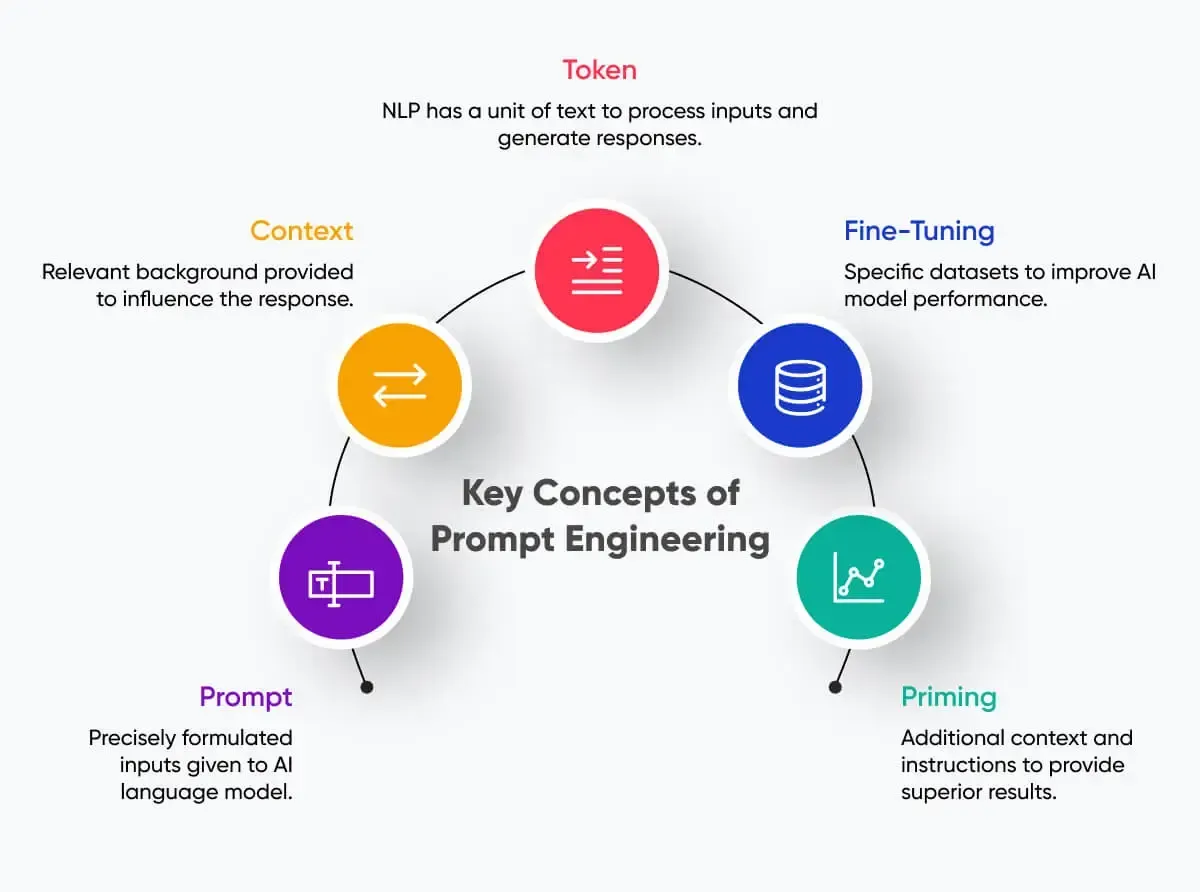
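To show why PEFT is so much cheaper than full fine-tuning, here is a small pure-Python sketch of LoRA (Low-Rank Adaptation), one widely used PEFT method. This article does not prescribe a specific PEFT method, so LoRA is chosen here only as an illustration, and the helper names are hypothetical. The pretrained weight W stays frozen, and only two small matrices, A and B, are trained. Their scaled product is added to W's output. In practice you would use a library such as Hugging Face's `peft` rather than hand-written matrix code.

```python
def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha: float, r: int) -> list[float]:
    """Compute h = W x + (alpha / r) * B (A x).

    W (d x k) is the frozen pretrained weight; only the small matrices
    A (r x k) and B (d x r) would be updated during fine-tuning.
    """
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """Trainable parameters for one d x k layer: full fine-tuning vs. a rank-r adapter."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    full, lora = trainable_params(4096, 4096, 8)
    print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

For a 4096 x 4096 layer with rank 8, the adapter trains about 0.39% as many parameters as full fine-tuning. LoRA conventionally initializes B to zero, so the adapted layer starts out behaving exactly like the pretrained one.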

3. Prompt Engineering

Tuning the prompt to be precise and detailed helps the model understand the context and intent of the query. Careful prompt design and iterative refinement can make output much more reliable: provide specific instructions and structured examples in the prompt to guide the model’s behavior, and implement feedback loops to refine prompts iteratively. I will discuss this in more detail in a future blog.
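The two practices mentioned above, structured examples and feedback loops, can be sketched in a few lines of Python. This is one possible shape under stated assumptions. `generate` stands for any function that sends a prompt to a model and returns text, and `is_acceptable` stands for whatever check fits your task. Both are hypothetical placeholders, as are the helper names.

```python
from typing import Callable


def build_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble explicit instructions, worked Q/A examples, and the new query."""
    lines = [
        f"Instructions: {task}",
        "If you are not sure of the answer, say \"I don't know\" instead of guessing.",
        "",
    ]
    for question, answer in examples:
        lines += [f"Q: {question}", f"A: {answer}", ""]
    lines += [f"Q: {query}", "A:"]
    return "\n".join(lines)


def refine(prompt: str, generate: Callable[[str], str],
           is_acceptable: Callable[[str], bool], max_rounds: int = 3) -> str:
    """Feedback loop: re-prompt with a correction note until the output passes a check.

    Makes at most `max_rounds` calls to `generate` and returns the last output.
    """
    output = generate(prompt)
    for _ in range(max_rounds - 1):
        if is_acceptable(output):
            break
        prompt += (
            f"\n\nYour previous answer {output!r} did not follow the instructions. "
            "Answer again.\nA:"
        )
        output = generate(prompt)
    return output
```

Capping the number of rounds keeps a stubborn model from looping forever. The instruction to say "I don't know" gives the model a sanctioned alternative to inventing an answer.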

4. Decoding Strategies

Adjusting how text is generated at decoding time helps control output randomness and creativity, striking a balance between accuracy and diversity.

Examples:

Constrained Decoding: Limiting output to specific vocabularies or structures.

Temperature Sampling: Lowering the sampling temperature reduces the model’s randomness and curbs overly creative or incorrect responses.
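Both strategies act on the scores (logits) the model assigns to each candidate next token. The minimal Python sketch below shows them together. The tiny vocabulary and logits used with it are made up for illustration, and the function names are hypothetical. Temperature divides the logits before the softmax. Constrained decoding masks disallowed tokens so they can never be sampled.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [logit / temperature for logit in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, temperature=1.0, allowed=None, rng=random):
    """Sample one token, optionally constrained to the `allowed` set.

    Constrained decoding here sets every disallowed token's logit to -inf,
    which gives it zero probability after the softmax.
    """
    if allowed is not None:
        logits = [logit if tok in allowed else -math.inf
                  for tok, logit in zip(vocab, logits)]
        if all(logit == -math.inf for logit in logits):
            raise ValueError("no allowed token is in the vocabulary")
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]
```

With logits `[2.0, 1.0, 0.0]`, a temperature of 0.5 gives the top token roughly 87% of the probability mass, compared with roughly 51% at a temperature of 2.0. As the temperature approaches zero, sampling approaches greedy decoding. Production systems often apply the same masking idea with grammars or output schemas instead of a fixed token set.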

Summary

Hallucinations are one of the biggest obstacles to deploying reliable LLMs. The techniques discussed here can substantially reduce them, in some cases by as much as half. As AI technology improves, the prospects for reliable AI keep growing. Reducing hallucinations is not just a technical requirement; it’s a path to AI that users can trust for real-world tasks like cybersecurity.