Mastering Fine-Tuning: Taking AI Models to the Next Level

By Ali Naqvi

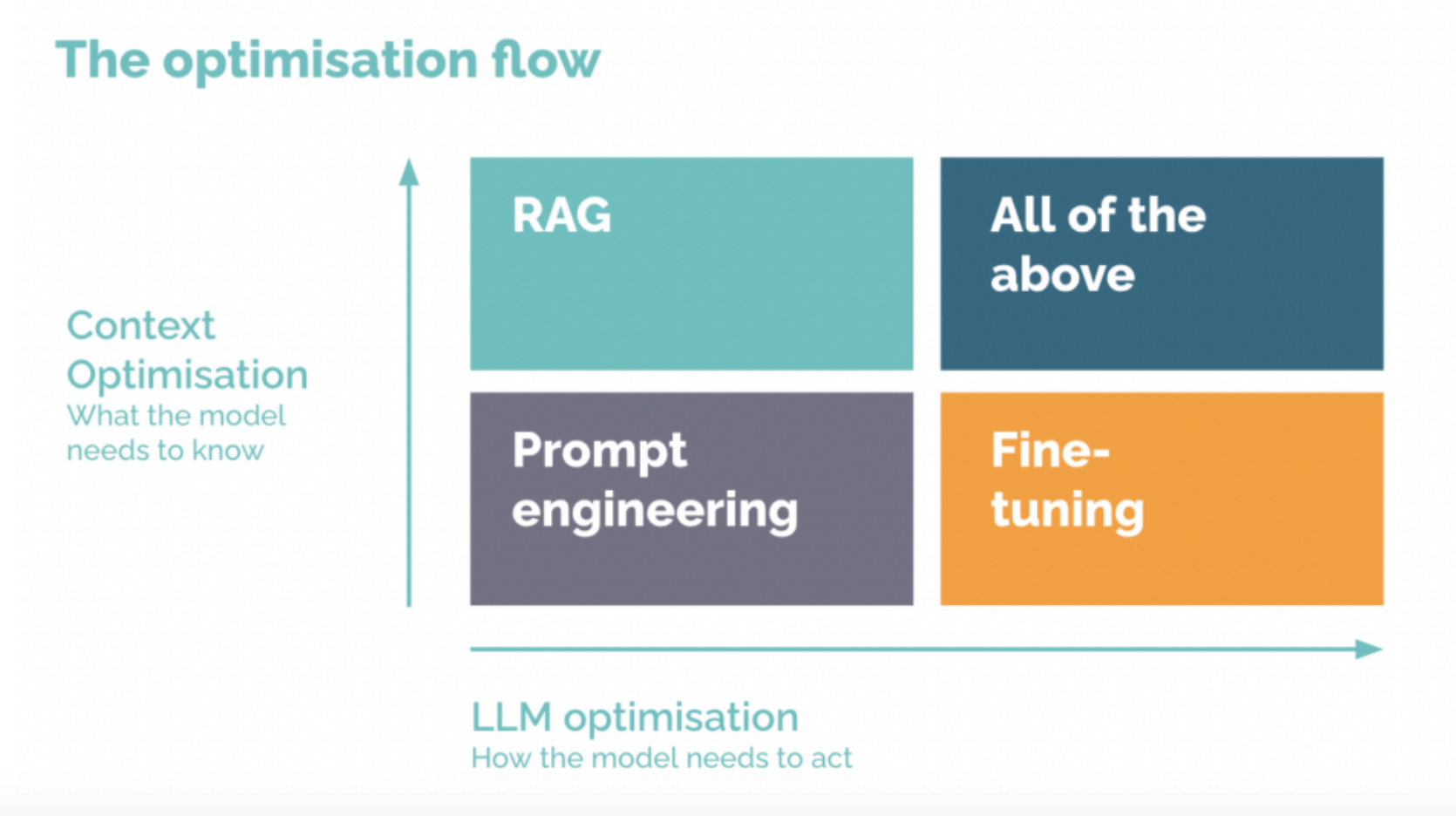

In my last blog post on the evolution of application security with AI technologies, I touched on fine-tuning. Now let’s get into the details: the benefits, the challenges and how to apply it across different domains.

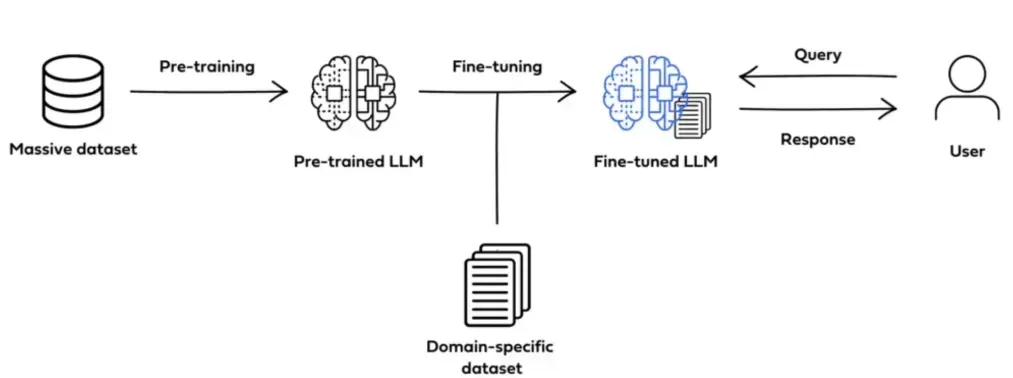

Fine-tuning is the process of adapting a pre-trained Large Language Model (LLM) to perform well on a specific task. By updating the model’s parameters with a smaller, task-specific dataset, we leverage its foundational knowledge and enhance its capabilities without training a model from scratch. This is useful for tasks like text classification, sentiment analysis, question answering, code generation and translation.
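To make the idea concrete, here is a toy sketch in plain Python, not a real LLM workflow. It assumes a two-feature linear model as a stand-in for an LLM: the hypothetical `pretrained_w` and `pretrained_b` are reused as the starting point and nudged by gradient descent on a small, made-up task dataset, instead of being trained from zero.

```python
# Toy fine-tuning sketch: a 2-feature linear model stands in for an LLM.
# The "pre-trained" parameters and the task data are made-up illustrations.


def predict(weights, bias, x):
    """Linear model: y = w . x + b."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def mse(weights, bias, data):
    """Mean squared error over (features, target) pairs."""
    return sum((predict(weights, bias, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(weights, bias, data, lr=0.05, epochs=500):
    """Continue training from existing parameters with batch gradient descent on MSE."""
    w, b = list(weights), bias  # work on a copy; the pre-trained weights stay intact
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            err = predict(w, b, x) - y
            for i, xi in enumerate(x):
                grad_w[i] += 2 * err * xi / n
            grad_b += 2 * err / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical parameters from broad "pre-training".
pretrained_w, pretrained_b = [1.0, 1.0], 0.0

# Small task-specific dataset generated from y = 1.0*x0 + 2.0*x1 + 0.5.
task_data = [([1.0, 0.0], 1.5), ([0.0, 1.0], 2.5), ([1.0, 1.0], 3.5), ([2.0, 1.0], 4.5)]

loss_before = mse(pretrained_w, pretrained_b, task_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
loss_after = mse(tuned_w, tuned_b, task_data)
print(f"task loss before fine-tuning: {loss_before:.4f}, after: {loss_after:.6f}")
```

The starting weights already get the first feature right, so training only has to correct what the new task needs, which is the intuition behind reusing a pre-trained model.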

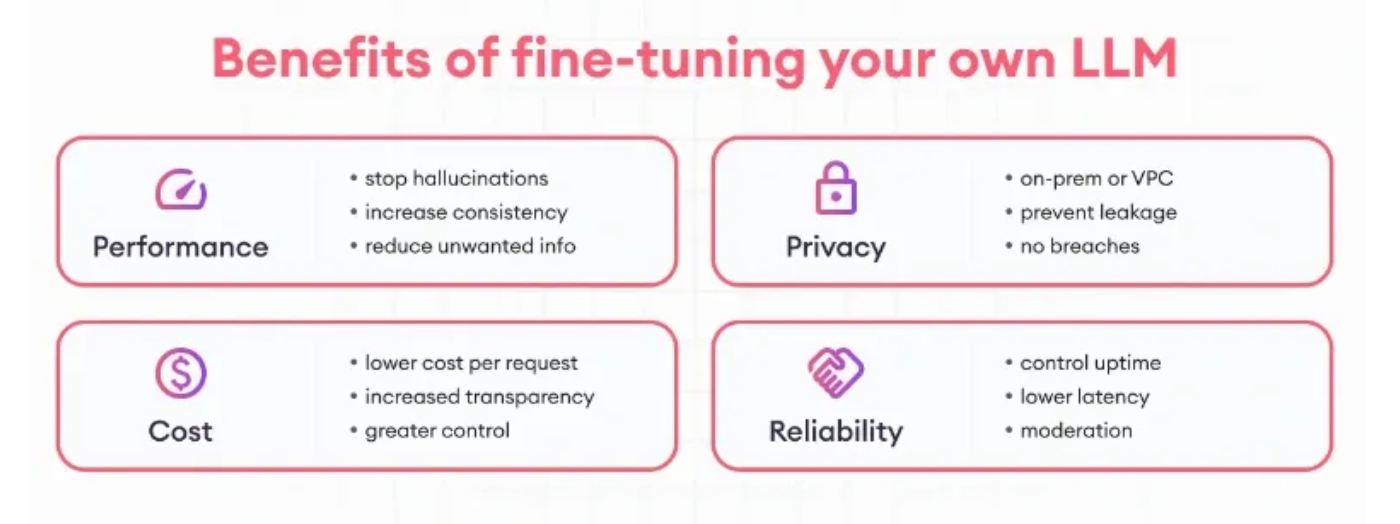

The Perks of Fine-Tuning:

Enhanced Accuracy: Tailoring a pre-trained LLM to a specific domain typically makes it more accurate on that domain’s tasks. For example, fine-tuning an LLM on data from a vulnerability database can make a security application more effective at detecting code flaws.

Resource Efficiency: Because it starts from an existing model, fine-tuning needs far less data and compute than training from scratch. There’s no need for huge datasets when you can build on what’s already there.

Improved Generalization: Fine-tuning helps the model generalize to new, unseen examples in the target domain, which its original training data may have covered only sparsely.

Faster Deployment: Shorter training times mean fine-tuning gets your AI solutions to market faster, helping you keep pace with the industry.

Customization: Add unique capabilities to your model, such as understanding industry-specific terminology or adopting a particular communication style.

Methods of Fine-Tuning:

Unsupervised Fine-Tuning: Adapt the model using large amounts of unlabeled text from your domain to enhance its understanding of specific language, terminology and style.

Supervised Fine-Tuning (SFT): Train the model on a labeled dataset relevant to your task, refining its parameters on input-output pairs that demonstrate the desired behavior.

Instruction Fine-Tuning via Prompt Engineering: Use carefully designed prompts, paired with the desired responses, as training examples to guide the model’s behavior. This is especially useful when the model must follow explicit instructions, and it reduces the need for large labeled datasets.
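For supervised and instruction fine-tuning, each training example typically pairs a prompt with the desired response. The sketch below assumes a whitespace "tokenizer" and an illustrative prompt template (both hypothetical; a real pipeline would use the model’s own tokenizer and chat format). It masks the prompt positions with -100, the default `ignore_index` of PyTorch’s `CrossEntropyLoss`, so the loss is computed only on the response tokens.

```python
# Hypothetical SFT example builder: toy whitespace tokenizer and made-up prompt template.
IGNORE_INDEX = -100  # PyTorch's CrossEntropyLoss skips targets equal to ignore_index (default -100)

PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n### Response:\n"  # illustrative format only


def encode(text, vocab):
    """Toy tokenizer: split on whitespace, assigning new ids to unseen tokens."""
    ids = []
    for token in text.split():
        if token not in vocab:
            vocab[token] = len(vocab)
        ids.append(vocab[token])
    return ids


def build_sft_example(instruction, response, vocab):
    """Concatenate prompt and response; mask prompt positions so only the response is learned."""
    prompt_ids = encode(PROMPT_TEMPLATE.format(instruction=instruction), vocab)
    response_ids = encode(response, vocab)
    return {
        "input_ids": prompt_ids + response_ids,
        "labels": [IGNORE_INDEX] * len(prompt_ids) + response_ids,
    }


vocab = {}
example = build_sft_example("Classify the sentiment: great product", "positive", vocab)
print(example["input_ids"])
print(example["labels"])
```

For unsupervised fine-tuning on raw domain text there is no prompt to mask, so the labels are usually just a copy of `input_ids`.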

Step-by-Step Fine-Tuning Process:

Data Collection and Preparation:

Gather Data: Collect data from CSV files, web pages, databases or cloud storage.

Clean the Data: Ensure the data is free of errors, inconsistencies and duplicates so the model doesn’t learn inaccuracies.

Balance the Dataset: Check for class imbalances, which can bias the model, so that it performs well on all categories.

Split the Data: Divide your dataset into training and validation sets (e.g., an 80/20 split) so you can evaluate performance on held-out data and catch overfitting early.
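The four preparation steps can be sketched with the Python standard library alone. The CSV text, its `text`/`label` columns and the vulnerability snippets are made up for illustration; the cleaning rules (strip whitespace, drop incomplete rows, drop case-insensitive duplicates) are one reasonable choice rather than a fixed recipe, and the 80/20 split uses a fixed seed so it is reproducible.

```python
import csv
import io
import random
from collections import Counter

# Made-up labeled snippets; real rows might come from CSV files, databases or cloud storage.
RAW_CSV = """text,label
The login form is vulnerable to SQL injection,vulnerable
Input is sanitized before the query runs,safe
The login form is vulnerable to SQL injection,vulnerable
,safe
Passwords are stored in plain text,vulnerable
Uses parameterized queries throughout,safe
Secrets are loaded from environment variables,safe
User input is passed straight to eval,vulnerable
File paths are built from raw user input,vulnerable
Output is HTML-escaped before rendering,safe
Session tokens never expire,vulnerable
Dependencies are pinned and scanned,safe
"""


def load_rows(csv_text):
    """Gather: parse CSV text into dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def clean(rows):
    """Clean: strip whitespace, lowercase labels, drop incomplete rows and duplicates."""
    seen, cleaned = set(), []
    for row in rows:
        text = (row.get("text") or "").strip()
        label = (row.get("label") or "").strip().lower()
        if not text or not label:
            continue  # incomplete row
        key = (text.lower(), label)
        if key in seen:
            continue  # case-insensitive duplicate
        seen.add(key)
        cleaned.append({"text": text, "label": label})
    return cleaned


def class_balance(rows):
    """Balance check: fraction of examples per label."""
    counts = Counter(row["label"] for row in rows)
    return {label: n / len(rows) for label, n in counts.items()}


def train_val_split(rows, val_fraction=0.2, seed=42):
    """Split: shuffle reproducibly, then hold out val_fraction for validation."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


cleaned = clean(load_rows(RAW_CSV))
train, val = train_val_split(cleaned)
print(len(cleaned), class_balance(cleaned), len(train), len(val))
```

With more skewed labels, a stratified split, which keeps each class’s proportion the same in both sets, is usually safer than the plain shuffle used here.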

Model Selection:

Align with the Task: Choose a pre-trained model that matches your use case.

Consider Model Size: Balance performance against computational resources; bigger models generally perform better but need more compute and memory.

Open-Source vs. Commercial: Decide between open models like Llama 2 for deep customization or commercial models like GPT-3.5 for ease of deployment.
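A quick back-of-the-envelope check helps when weighing model size against resources. This sketch assumes memory ≈ parameter count × bytes per parameter, ignores activations and framework overhead, and uses the commonly quoted figure of about 16 bytes per parameter for full fine-tuning with the Adam optimizer in mixed precision. The 7B/13B/70B sizes are just examples (they match the Llama 2 family).

```python
# Rough memory estimates with 1 GB = 1e9 bytes.
# Ignores activations, KV cache and framework overhead.
WEIGHT_BYTES = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "4-bit": 0.5}

# Commonly quoted estimate for full fine-tuning with Adam in mixed precision:
# fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4) + two Adam moments (4 + 4).
FULL_FT_BYTES_PER_PARAM = 16.0


def weights_gb(num_params, precision):
    """Memory needed just to hold the weights at the given precision."""
    return num_params * WEIGHT_BYTES[precision] / 1e9


def full_finetune_gb(num_params):
    """Weights, gradients and optimizer state for full fine-tuning."""
    return num_params * FULL_FT_BYTES_PER_PARAM / 1e9


for billions in (7, 13, 70):
    n = billions * 1e9
    print(
        f"{billions}B params: fp16 weights ~{weights_gb(n, 'fp16/bf16'):.0f} GB, "
        f"4-bit weights ~{weights_gb(n, '4-bit'):.1f} GB, "
        f"full fine-tuning ~{full_finetune_gb(n):.0f} GB"
    )
```

The gap between holding the weights and fully fine-tuning them is why PEFT and quantization matter so much for larger models.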

Industry-Specific Model Examples:

Med-PaLM 2: Designed for medical applications and tuned on medical datasets.

FinGPT: Aimed at the financial industry and trained on financial news and social media data.

Parameter Adjustments & Training Configuration:

Learning Rate: Choose a learning rate small enough for stable training but large enough for the model to converge in a reasonable time.

Batch Size: Choose a batch size that balances training speed with memory limits.

Number of Epochs: Choose the number of full passes over the training data to avoid both underfitting and overfitting.

PEFT Techniques: Use parameter-efficient fine-tuning (PEFT) methods like LoRA or adapters to reduce computational load and memory usage.
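These settings interact: the effective batch size and the number of epochs determine how many optimizer steps you take, and the learning rate is often varied over those steps. The sketch below assumes gradient accumulation and a linear warmup-then-decay schedule, similar in shape to `get_linear_schedule_with_warmup` in Hugging Face Transformers; the default values in the hypothetical `FineTuneConfig` are illustrative placeholders, not recommendations.

```python
import math
from dataclasses import dataclass


@dataclass
class FineTuneConfig:
    # Illustrative placeholders; tune these for your model, data and hardware.
    learning_rate: float = 2e-5
    per_device_batch_size: int = 8  # bounded by GPU memory
    grad_accum_steps: int = 4       # accumulate gradients over 4 batches before each update
    epochs: int = 3
    warmup_ratio: float = 0.1       # fraction of steps spent ramping the learning rate up


def schedule_lengths(cfg, num_examples):
    """Return (total optimizer steps, warmup steps) for one training run."""
    effective_batch = cfg.per_device_batch_size * cfg.grad_accum_steps
    steps_per_epoch = math.ceil(num_examples / effective_batch)
    total = steps_per_epoch * cfg.epochs
    return total, int(cfg.warmup_ratio * total)


def lr_at(step, peak_lr, warmup_steps, total_steps):
    """Linear warmup from 0 to peak_lr, then linear decay back to 0 at total_steps."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    return peak_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


cfg = FineTuneConfig()
total, warmup = schedule_lengths(cfg, num_examples=10_000)
for step in (0, warmup // 2, warmup, total // 2, total):
    print(f"step {step:4d}: lr = {lr_at(step, cfg.learning_rate, warmup, total):.2e}")
```

Gradient accumulation lets a small per-device batch behave like a larger one without the extra memory, at the cost of fewer optimizer updates per epoch.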

Evaluation and Testing:

Validation: Test the model on the validation set to monitor performance and guide adjustments.

Metrics Selection: Use metrics that fit your task, such as accuracy and F1 score for classification, to measure model performance.
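For a classification task such as flagging vulnerable code, accuracy alone can hide poor performance on the class you care about, so precision, recall and F1 are common choices. The sketch below computes them from scratch for one positive label; the labels and predictions are made up.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy plus precision, recall and F1 for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up validation labels and model predictions.
y_true = ["vulnerable", "safe", "vulnerable", "safe", "vulnerable"]
y_pred = ["vulnerable", "vulnerable", "safe", "safe", "vulnerable"]
metrics = classification_metrics(y_true, y_pred, positive="vulnerable")
print({name: round(value, 3) for name, value in metrics.items()})
```

For generation tasks such as translation or summarization, overlap metrics like BLEU or ROUGE, or human review, are more common.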

Applications That Benefit from Fine-Tuning:

Advanced Q&A Systems: Fine-tuning helps models handle complex, commonsense questions, making them well suited to sophisticated customer-support chatbots.

Application Security: Training models on a vulnerability database helps them detect specific types of code vulnerabilities.

Financial Forecasting: Fine-tuning models to improve out-of-distribution generalization can support more accurate stock analysis and market predictions.

Challenges and How to Overcome Them:

Catastrophic Forgetting:

Problem:

The model may lose pre-trained knowledge when fine-tuned aggressively.

Solution:

Use PEFT methods, which keep the original weights frozen and update only a small set of parameters, to preserve the model’s pre-trained knowledge.
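The core idea behind this solution is simply not updating the original weights. Below is a framework-free sketch of the simplest selective variant: base parameters are frozen and only a small, hypothetical `task_head` is trained. In PyTorch, the equivalent is setting `requires_grad = False` on the base model’s parameters and passing only the trainable ones to the optimizer.

```python
# Framework-free sketch of selective fine-tuning: base parameters are frozen and only a
# small task head is updated. Parameter names and values are hypothetical.


def sgd_step(params, grads, trainable, lr=0.1):
    """Return updated parameters; names not in `trainable` are copied unchanged (frozen)."""
    updated = {}
    for name, values in params.items():
        if name in trainable:
            updated[name] = [v - lr * g for v, g in zip(values, grads[name])]
        else:
            updated[name] = list(values)
    return updated


def trainable_fraction(params, trainable):
    """Share of all parameter values that are allowed to change."""
    total = sum(len(v) for v in params.values())
    tuned = sum(len(v) for name, v in params.items() if name in trainable)
    return tuned / total


params = {
    "base.layer1": [0.5] * 8,   # stands in for pre-trained weights
    "base.layer2": [0.25] * 8,
    "task_head": [0.0] * 4,     # new, task-specific parameters
}
grads = {name: [1.0] * len(v) for name, v in params.items()}  # pretend gradients
trainable = {"task_head"}

new_params = sgd_step(params, grads, trainable)
print(trainable_fraction(params, trainable), new_params["task_head"])
```

Because the base values never change, whatever the model learned in pre-training is still there after fine-tuning; only the small head adapts to the new task.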

Computational Costs:

Problem:

Fine-tuning large models requires significant computational resources.

Solution:

Use efficient training techniques such as PEFT, rent cloud-based GPUs, or pick a smaller model that still meets your performance requirements.
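To size the compute budget, a widely used rule of thumb estimates transformer training cost at about 6 FLOPs per parameter per training token (forward plus backward pass) when every parameter is updated. The sketch converts that into GPU-hours; the peak throughput (roughly an A100’s dense BF16 figure), the 40% utilization and the 7B-parameter, 10-million-token job are assumptions to replace with your own numbers.

```python
def training_flops(num_params, num_tokens):
    """Rule of thumb: about 6 FLOPs per parameter per training token (forward + backward)."""
    return 6 * num_params * num_tokens


def gpu_hours(total_flops, peak_flops_per_gpu=312e12, utilization=0.4):
    """GPU-hours at an assumed sustained fraction of a GPU's peak throughput."""
    return total_flops / (peak_flops_per_gpu * utilization) / 3600


# Hypothetical job: full fine-tuning of a 7B-parameter model on 10 million tokens.
flops = training_flops(7e9, 10e6)
print(f"{flops:.2e} FLOPs, about {gpu_hours(flops):.1f} GPU-hours")
```

PEFT methods skip weight gradients for frozen layers, but the forward and backward passes still run through them, so compute savings are usually smaller than the parameter savings.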

Memory Constraints:

Problem:

Limited GPU memory hinders fine-tuning of large models.

Solution:

Use memory optimization techniques like quantization, activation checkpointing, and memory-efficient optimizers.
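Quantization is the easiest of these to see in code. The sketch applies symmetric ("absmax") int8 quantization to a made-up weight vector: one scale per tensor maps floats to integers in [-127, 127], so each weight takes 1 byte instead of 4 in fp32, at the cost of a rounding error of at most half the scale. Production libraries such as bitsandbytes use more refined schemes (for example, block-wise scales and 4-bit formats).

```python
# Symmetric ("absmax") per-tensor int8 quantization of a toy weight vector.


def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one scale for the whole tensor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from int8 codes."""
    return [qi * scale for qi in q]


weights = [0.12, -0.5, 0.33, 0.0, 0.49]  # made-up weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, f"scale={scale:.6f}", f"max error={max_error:.6f}")
```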

Techniques to address challenges:

Adapters and LoRA:

Insert small trainable modules (adapters) or low-rank update matrices (LoRA) that change the model’s behavior without updating all of its parameters.

Quantization:

Store the model’s weights at lower precision (e.g., 8-bit or 4-bit) to reduce memory use and, on supporting hardware, speed up computation.

PEFT Methods:

Train only a small subset of parameters (or a small number of new ones) to make fine-tuning more efficient.
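LoRA is compact enough to sketch without a framework. This version assumes a single linear layer: the pre-trained weight `W` stays frozen, and the layer adds a trainable low-rank update `B @ A`, scaled by `alpha / rank`. As in the LoRA paper, `A` starts random and `B` starts at zero, so the layer initially behaves exactly like the original one. Adapters, which insert small bottleneck layers instead, are not shown. In practice, Hugging Face’s `peft` library wraps this pattern (for example, via `LoraConfig` and `get_peft_model`).

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, W, rank, alpha, seed=0):
        self.W = W  # frozen pre-trained weight; never updated during fine-tuning
        d_out, d_in = len(W), len(W[0])
        rng = random.Random(seed)
        # A (rank x d_in): small random values. B (d_out x rank): zeros, so B @ A starts at 0.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scaling = alpha / rank

    def forward(self, x):
        """h = W x + (alpha / rank) * B (A x)."""
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * u for b, u in zip(base, update)]

    def trainable_params(self):
        """Only A and B are trained."""
        return sum(len(row) for row in self.A) + sum(len(row) for row in self.B)


def lora_param_counts(d_out, d_in, rank):
    """(parameters in a full weight update, parameters in a rank-r LoRA update)."""
    return d_out * d_in, rank * (d_out + d_in)


W = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8], [0.9, 1.0, 1.1, 1.2]]
layer = LoRALinear(W, rank=2, alpha=4)
x = [1.0, 0.5, -0.5, 2.0]
print(layer.forward(x), layer.trainable_params())

full, lora = lora_param_counts(4096, 4096, rank=8)
print(f"full: {full:,}  LoRA: {lora:,}  ({lora / full:.2%})")
```

For a 4096 × 4096 projection at rank 8, LoRA trains r(d_out + d_in) = 65,536 values instead of about 16.8 million, under 0.4% of the layer.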

Tools and Frameworks:

Hugging Face Transformers:

One of the most popular libraries for fine-tuning NLP models, with strong community support and documentation.

PyTorch and TensorFlow:

Robust platforms for implementing and customizing fine-tuning.

Hyperparameter Optimization Tools:

Tools such as Optuna automate the search for well-performing hyperparameters.
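Optuna’s job is to run a loop like the one below more intelligently, using adaptive samplers (TPE by default) and pruning of unpromising trials. To keep the example self-contained, this sketch swaps in plain random search from the standard library rather than Optuna’s API, and `toy_validation_loss` is a made-up stand-in for "fine-tune with these settings, then score on the validation set."

```python
import math
import random


def toy_validation_loss(learning_rate, batch_size):
    """Made-up stand-in for a real fine-tune-and-evaluate run.

    Bowl-shaped surface with its minimum at learning_rate=1e-4, batch_size=16.
    """
    return (math.log10(learning_rate) + 4) ** 2 + 0.1 * (math.log2(batch_size) - 4) ** 2


def random_search(objective, n_trials=50, seed=0):
    """Sample hyperparameters at random and keep the best-scoring trial."""
    rng = random.Random(seed)
    best_loss, best_params = math.inf, None
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-6, -3),  # sample the learning rate on a log scale
            "batch_size": rng.choice([4, 8, 16, 32, 64]),
        }
        loss = objective(**params)
        if loss < best_loss:
            best_loss, best_params = loss, params
    return best_loss, best_params


best_loss, best_params = random_search(toy_validation_loss)
print(f"best loss {best_loss:.4f} with {best_params}")
```

Each real trial is a full fine-tuning run, which is why smarter samplers and early pruning pay off quickly.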

The Future:

Few-shot and zero-shot learning are changing the fine-tuning landscape. Models increasingly need few or no examples to perform new tasks, which will open up even more applications for AI. Still, fine-tuning remains an essential skill for making AI models work for you.

Conclusion:

Fine-tuning is a fundamental practice for customizing AI models for specific tasks. By adjusting pre-trained LLMs with domain-specific data, we can achieve higher performance, more efficient resource usage and greater adaptability. Despite challenges like catastrophic forgetting, computational demands and memory limitations, the strategies discussed, such as PEFT methods, adapters and quantization, provide effective ways to overcome these hurdles. As AI technology progresses, honing fine-tuning skills will remain crucial for professionals aiming to expand the possibilities within their fields.

References: